Is the next crunch in the AI race the lack of data center space?

With an estimated 4.5 million NVIDIA H100s coming online in 2023 and 2024, current and planned data center capacity may struggle to house all these GPUs.

This week, NVIDIA published its financial results for the fourth quarter of its fiscal year 2024 (the quarter ended in late January 2024). The company reported revenue of $22.1 billion, up 265% from a year ago, driven by strong sales of AI chips for servers, particularly the NVIDIA H100 GPU. Demand for NVIDIA GPUs remains strong: big tech companies have announced large investments for 2024 (Meta acquiring 350k H100s in 2024), and lead times for the H100 are still between 3 and 4 months.

Much of the market's focus has been on the supply and demand imbalance for NVIDIA chips, but not much thought has gone into where these chips will be placed. Do we even have the infrastructure to keep all these GPUs running?

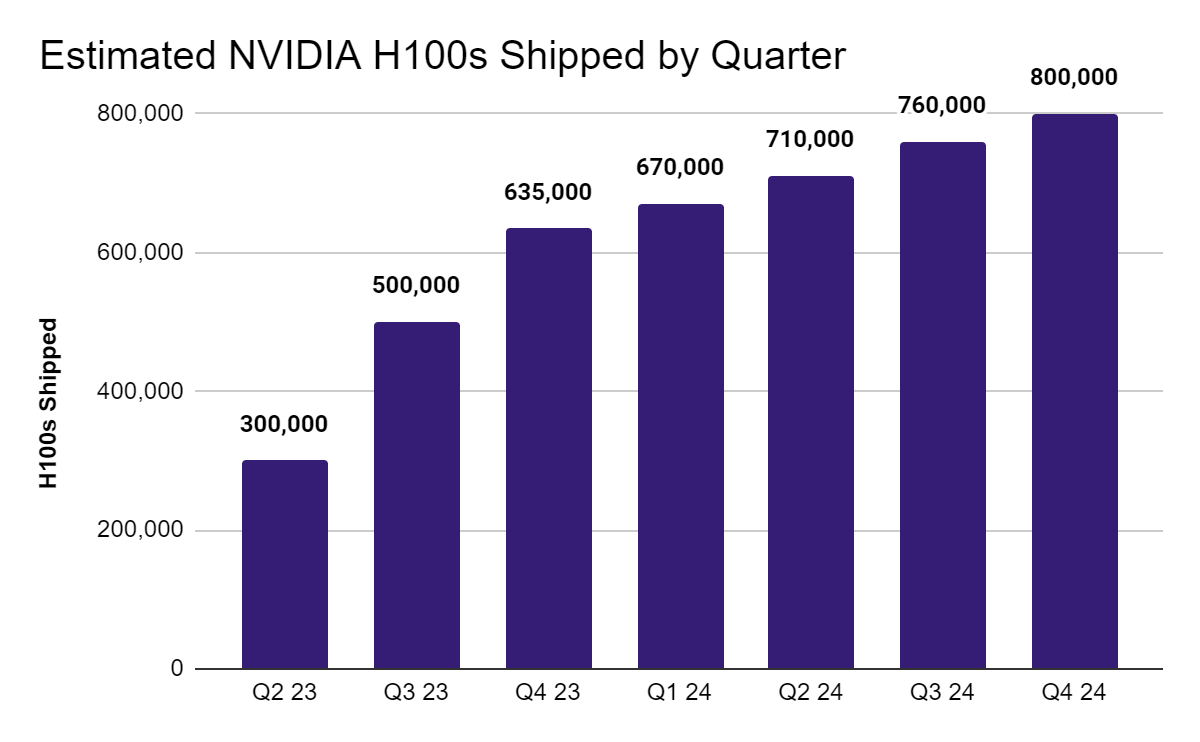

If we extrapolate previous reports to the financial results published this week, NVIDIA could have shipped around 630,000 H100s in Q4 2023. This would bring the total number of H100s shipped in 2023 to around 1.5 million. Moreover, if we use the market's expectations for NVIDIA revenue to forecast 2024 deliveries, NVIDIA could ship around 3 million H100s in 2024. So across 2023 and 2024, data centers around the world would need to absorb around 4.5 million H100s. Is there enough existing and planned capacity for all of this?

Source: OMDIA, NVIDIA Financial Reports, Own Calculations

Energy Consumption & Data Center Capacity

According to some sources, a 22,000 H100 supercluster can draw up to 31 MW of power. Extrapolating from that figure, the forecasted 4.5 million H100s coming to market in 2023 and 2024 would draw an astonishing 6.3 GW or so, more than double the data center capacity of Northern Virginia (the world’s largest data center market).
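The extrapolation above is a simple linear scaling, and it can be sketched in a few lines of Python. This is a back-of-the-envelope check rather than a model: it assumes power scales proportionally with GPU count, and the constant and function names are made up for illustration. The inputs are the figures quoted in the text.

```python
# Figures quoted in the text above.
CLUSTER_GPUS = 22_000        # H100s in the reference supercluster
CLUSTER_POWER_MW = 31.0      # peak draw of that cluster, in MW
FORECAST_GPUS = 4_500_000    # estimated H100 shipments, 2023 + 2024


def power_per_gpu_kw(cluster_gpus: int, cluster_power_mw: float) -> float:
    """All-in power per GPU in kW, implied by the cluster-level figure."""
    return cluster_power_mw * 1_000 / cluster_gpus


def fleet_power_gw(gpus: int, cluster_gpus: int, cluster_power_mw: float) -> float:
    """Total draw in GW, assuming power scales linearly with GPU count."""
    return gpus * cluster_power_mw / cluster_gpus / 1_000


per_gpu_kw = power_per_gpu_kw(CLUSTER_GPUS, CLUSTER_POWER_MW)
total_gw = fleet_power_gw(FORECAST_GPUS, CLUSTER_GPUS, CLUSTER_POWER_MW)

print(f"Implied power per GPU: {per_gpu_kw:.2f} kW")
print(f"Implied draw for {FORECAST_GPUS:,} GPUs: {total_gw:.2f} GW")
```

With these inputs the implied figure is about 1.4 kW per GPU, which is well above what the chip alone draws, presumably because the cluster number also covers servers, networking and cooling. The total lands at roughly 6.3 GW, in line with the estimate in the text.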

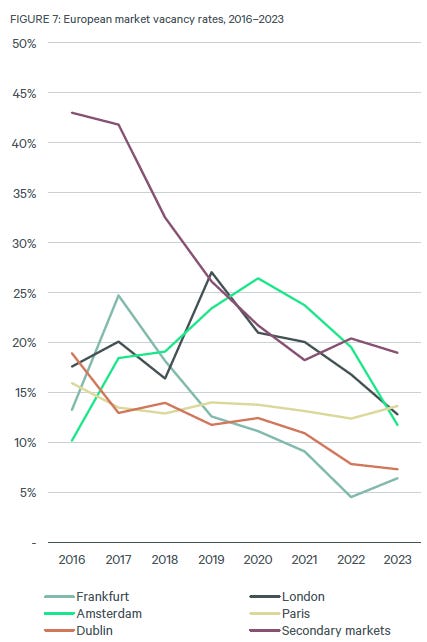

According to a report by Cushman & Wakefield, an estimated 7.1 GW of new data center capacity was expected to come online in 2023 alone. However, that capacity also has to serve existing demand from traditional workloads. Given this additional demand, it’s no wonder that data center vacancy rates have been plummeting, as can be observed in this report on the EU data center market.

Source: CBRE Research - European Data Centers - Q4 2023
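To put the two numbers side by side, here is a rough sketch of how much of the new capacity the forecasted H100s alone would take up. It reuses the cluster figures quoted earlier (22,000 H100s drawing 31 MW) and the 7.1 GW estimate for 2023. The second scenario, where 2024 adds the same amount of capacity as 2023, is purely my own assumption for illustration and not a figure from any report.

```python
# Figures quoted in the text.
FORECAST_GPUS = 4_500_000     # estimated H100 shipments, 2023 + 2024
CLUSTER_GPUS = 22_000         # H100s in the reference supercluster
CLUSTER_POWER_MW = 31.0       # peak draw of that cluster, in MW
NEW_CAPACITY_2023_GW = 7.1    # new data center capacity expected in 2023


def share_of_capacity(demand_gw: float, capacity_gw: float) -> float:
    """Fraction of the given capacity that the demand would occupy."""
    return demand_gw / capacity_gw


# Linear extrapolation of the cluster figure to the full forecast.
gpu_demand_gw = FORECAST_GPUS * CLUSTER_POWER_MW / CLUSTER_GPUS / 1_000

# Scenario 1: compare against the 2023 capacity estimate only.
share_2023_only = share_of_capacity(gpu_demand_gw, NEW_CAPACITY_2023_GW)

# Scenario 2 (ASSUMPTION, not from the source): 2024 adds as much as 2023.
share_two_years = share_of_capacity(gpu_demand_gw, 2 * NEW_CAPACITY_2023_GW)

print(f"Share of 2023 new capacity: {share_2023_only:.0%}")
print(f"Share if 2024 matched 2023 (assumed): {share_two_years:.0%}")
```

Even in the more generous two-year scenario, the H100s would take up a bit under half of all new capacity before any traditional workloads are counted.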

Currently, data centers worldwide account for around 1 to 1.5 percent of global electricity consumption. However, in certain markets the share is much higher, and governments have started to take action to curb the development of new data centers. For example, in Ireland, data centers consumed 18% of the country’s electricity in 2022, up from 5% in 2015. As a result, new data center connections in Dublin have been halted until at least 2028. Similar curbs on new data centers have been put in place in the Netherlands and Singapore. It’s no wonder that data center builders are reporting access to sufficient power as a major blocker to bringing new data centers online.

Moreover, retrofitting existing capacity to host these new servers can also be a big challenge. Because of the high power consumption of the NVIDIA H100s, data centers need different power management equipment, more (or different) cooling setups and bigger racks. With lead times of 12+ months on some of this equipment, it won’t be easy to retrofit existing space either.

With both a large increase in demand for data center capacity and supply woes, the next crunch in the AI market could very well be in the space and equipment required to house all the GPUs coming online. With hyperscalers reportedly taking 70-80% of all new data center capacity, finding space for all these H100s is certainly going to be a challenge, one where partnerships, good industry connections and long, large contracts will play a big role in securing capacity.

So What?

Well, personally, it just fascinates me to see what is happening with the AI market on all fronts, and the data center space is certainly a big one to keep an eye on going forward. However, we can also start looking at who benefits from this crunch. A few clear candidates on the equipment side include Supermicro (providing the physical servers), Schneider Electric, Eaton, Vertiv and nVent. On the data center side, existing players like Equinix or NTT could very well end up benefiting from this market dynamic.

And well, if you’re on the lookout to bring some GPUs online, start planning where they will be hosted well in advance, and/or contact those who may already have capacity booked and can provide it to you.

If you enjoyed this content, subscribe to my Substack. I will continue writing about tech, AI, startups & VCs.