LLM Token Economics - Not All Tokens Are Created Equal (Part I)

Exploring the economics of LLM: A guide to understanding pricing, token variability, and their impact on AI model costs.

Large Language Models (LLMs) are quickly becoming a key part of many tools we use every day and are essential in building new tech products. As they grow more important, it's crucial for us to understand how they're priced. This means getting to know the 'economics' of using these powerful AI models. In this first part of LLM Token Economics we will explore the basics of how LLMs are priced through the use of “tokens” and delve into considerations that need to be kept in mind.

Tokens have become the fundamental block for input and output data. To get a good understanding how the pricing in these services works, we need to get an understanding of what constitutes a token, the different types of tokens and the differences in tokens between LLM models. Not always when comparing pricing per token we’re comparing apples to apples. We finish this first article on LLM Token Economics with an example putting the different concepts into practice.

So what is a token in the context of LLMs?

Token in the context of LLMs means a chunk of text that the model can read (input) or generate (output). This chunk of text can range from a single letter or character all the way to whole words. LLMs understand the text we write as a sequence of tokens strung together and output also a sequence of tokens strung together.

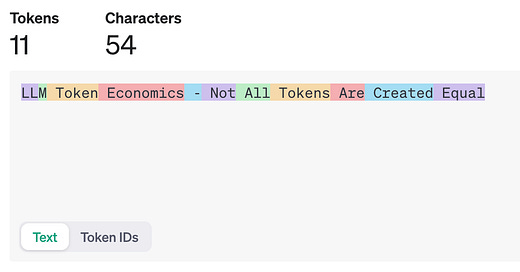

For example, using OpenAI’s tokenizer playground1 we can understand how OpenAI’s models (GPT-3.5 and GPT-4) tokenize a piece of text and the total count of tokens in that piece of text.

You can try it yourself here: Link

Not All Tokens Are Created Equal - The Differences to Consider With LLM Tokens

Now, the not so great news. When trying to compare pricing on tokens between providers of LLMs there’s multiple caveats that need to be considered. Tokens are priced differently not only depending on the model but also on what type of token it is. We also need to consider the possible quality of the token being generated even when using the same model.

Tokenization differences between models

LLM tokenization can vary from one model to another. What one model considers a single token could very well be two tokens on another. What this means is that comparing the pricing of one model against another by the ‘price per token’ won’t always yield an apples to apples comparison.

For example, we can go back to the text we previously saw when looking at what a token was. In the case of OpenAI GPT3.5 & GPT4, the title of this article would have 11 tokens and 54 characters.

Meanwhile, if we consider the same example using Meta’s Llama 2, we would find that the model would consider the title to have 16 tokens instead of the 11 tokens of OpenAI’s models.

OpenAI GPT 3.5 & 4

Google Gemma

Meta Llama 2

How many more tokens one model produces over another is important to consider for cost comparisons. Sadly, there’s been few studies regarding this topic so it’s an area that is still to be explored. However, as a point of reference, Anyscale2 found that Llama 2 counted ~19% more tokens than OpenAI’s own models, so if you’re comparing pricing between the two models’ that would need to be a factor to consider.

Input and Output Tokens

LLM providers may price differently input tokens and output tokens. For example in the case of OpenAI they price their GPT-4 Turbo at $10 per 1 million input tokens and $30 per 1 million output tokens. A general rule-of-thumb is that one token in OpenAI equates to 3/4s of a word so 1 million tokens would roughly be 750,000 words (or ~1,500 pages).

Pricing differently between input and output may not always be the case. For some providers of LLM-as-a-Service they price both input and output at the same price. For example here we can see the pricing for Mistral’s Mixture of Experts, what’s considered at this moment as one of the best open source LLMs.

Mistral’s Mixture of Experts 8x7B Model Pricing:

Model Type and Performance

Also note that we need to be careful even when comparing the listed per token pricing of different LLM-as-a-Service providers. Even when selecting the same model, the output quality may vary. This can happen if the provider is using a “quantized” version of the model which makes it less expensive to run. Quantization involves making the LLM run more efficiently, using less memory and computational resources, in exchange of reducing the quality of the answers. In the end, two providers offering for example Mistral’s Mixture of Experts model could very well be offering different quality of outputs (and with little transparency at that).

Unfortunately, it’s not that easy to spot if a model has been quantized without doing the homework. For these cases, some claims like Together AI’s claim3 of not quantizing models could be of some worth.

For more information on quantization: Link

Additionally, it’s also important to understand the performance of LLM-as-a-Service providers in terms of their latency output. If your use case may be latency sensitive providers speed to output the first token and the # of tokens generated per second may be of importance for you. Here we can see differences in throughput where Groq clearly leads the pack:

https://artificialanalysis.ai/models/mixtral-8x7b-instruct

Example - Klarna Automating 700 customer support roles with LLMs

To better understand this topic let’s do an example using Klarna’s case as a base. Klarna recently announced that it had automated the work of 700 full-time agents using LLMs. They mentioned that during the month-long trial the AI assistant had 2.3 million conversations. Let’s have the following assumptions:

From Klarna’s PR release: There were 2.3 million conversations in a month.

Key assumption (mine): Each conversation had an average input length of 1,000 tokens and an average output length of 1,000 tokens. To which extent this is accurate is a matter for debate for visualisation, 1,000 tokens roughly represent 750 words (~ 1.5 pages). For example, below an imaginary set of instructions for the LLM using GPT-4:

Based on this, we can estimate the approximate cost to Klarna of using OpenAI’s model.

Now, compare $92k per month to the cost of having 700 full-time employees doing customer support. Even if we double the average tokens per conversation, the cost per month would still be pretty low (each support employee would need to cost Klarna $262 per month to break even with 2k tokens).

Now imagine if Klarna had used an open source model (Mixtral 8x7B) and had used for example Anyscale as their LLM-as-a-Service provider. Let’s assume here Mixtral uses ~15% tokens when tokenizing text than OpenAI’s model (ran the Mistral tokenizer on the blog’s title and got 14 tokens vs OpenAI’s 11).

In this case, the cost to Klarna would be substantially lower. Now a trade-off analysis between the quality vs cost between the two models would need to be done to more accurately make a decision. Regardless, in both cases, LLMs are likely a fraction of what 700 full-time employees would be costing Klarna each month.

Conclusion

Understanding the economics behind the use of LLMs is important to make informed decisions in its implementation. Here we explored the nuances that need to be accounted for when considering the pricing with tokens.

In our next take we will explore the difference of hosting the models yourself and the unit economics of providers of LLM-as-a-Service.

If you enjoyed this post I invite you to subscribe to my blog. I will continue writing around tech, AI, startups & VCs.

https://platform.openai.com/tokenizer

https://www.anyscale.com/blog/llama-2-is-about-as-factually-accurate-as-gpt-4-for-summaries-and-is-30x-cheaper

https://www.together.ai/blog/together-inference-engine-v1